Abstract

Equipped with Large Language Models (LLMs), human-centered robots are now capable of performing a wide range of tasks that were previously deemed challenging or unattainable. However, merely completing tasks is insufficient for cognitive robots, which should also learn human preferences and apply them to future scenarios. In this work, we propose a framework that combines human preferences with physical constraints, requiring robots to complete tasks while respecting both. First, we developed a benchmark of everyday household activities, which are often evaluated according to specific preferences. We then introduced In-Context Learning from Human Feedback (ICLHF), in which human feedback comes from direct instructions and from adjustments made, intentionally or unintentionally, in daily life. Extensive experiments, in which ICLHF generates task plans that balance physical constraints with preferences, demonstrate the efficiency of our approach.

Approach

A Brief Overview of ICLHF Workflow

The ICLHF algorithm consists of five steps:

- Make an initial plan from the user's instruction and the summarized preferences.

- Run three processes for the LLM's preliminary planning.

- Use POG, an algorithm for efficient sequential manipulation planning on scene graphs, to refine the details of the plan.

- Execute the plan and obtain physical feedback.

- Reflect on human preferences and update the plan.
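The five steps form a closed loop: plan, refine under physical constraints, execute, collect feedback, and replan with the updated preferences. The following is a minimal toy sketch of that loop, not the authors' implementation. The LLM planner, POG, robot execution, and the human are all replaced by hypothetical stand-ins (`preliminary_plan`, `refine_plan`, `execute`, `human_adjustments`); the `CAPACITY` table and the `PreferenceMemory` representation are assumptions made for illustration, and the three sub-processes of the preliminary planning step are not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Toy world model (hypothetical): how many objects each location can hold.
CAPACITY: Dict[str, int] = {"bed": 3, "desk": 1, "shelf": 2}
SLEEP_ITEMS = {"pillow", "blanket"}


@dataclass
class PreferenceMemory:
    """Accumulated human feedback (hypothetical representation)."""
    avoided_locations: List[str] = field(default_factory=list)

    def summarize(self) -> str:
        # A real system would insert this summary into the LLM prompt.
        return "; ".join(f"avoid {loc}" for loc in self.avoided_locations)


def preliminary_plan(objects: List[str], memory: PreferenceMemory) -> Dict[str, str]:
    """Steps 1-2 stand-in for the LLM: spread objects round-robin over
    locations the user has not ruled out (capacity is ignored here)."""
    allowed = [loc for loc in CAPACITY if loc not in memory.avoided_locations]
    return {obj: allowed[i % len(allowed)] for i, obj in enumerate(objects)}


def refine_plan(plan: Dict[str, str], memory: PreferenceMemory) -> Dict[str, str]:
    """Step 3 stand-in for POG: repair the plan so no location exceeds its
    capacity, preferring locations the user has not ruled out."""
    load = {loc: 0 for loc in CAPACITY}
    refined: Dict[str, str] = {}
    for obj, loc in plan.items():
        if load[loc] >= CAPACITY[loc]:
            free = [cand for cand in CAPACITY if load[cand] < CAPACITY[cand]]
            if not free:
                raise ValueError("no physically feasible placement")
            preferred = [cand for cand in free if cand not in memory.avoided_locations]
            loc = (preferred or free)[0]
        refined[obj] = loc
        load[loc] += 1
    return refined


def execute(plan: Dict[str, str]) -> List[str]:
    """Step 4 stand-in: return physical feedback as the list of
    over-capacity locations (empty means execution succeeded)."""
    load: Dict[str, int] = {}
    for loc in plan.values():
        load[loc] = load.get(loc, 0) + 1
    return [loc for loc, n in load.items() if n > CAPACITY[loc]]


def human_adjustments(plan: Dict[str, str]) -> List[str]:
    """Hypothetical user who dislikes non-sleep items on the bed; the
    locations they would clear up serve as feedback."""
    on_bed = [o for o, loc in plan.items() if loc == "bed" and o not in SLEEP_ITEMS]
    return ["bed"] if on_bed else []


def iclhf_loop(objects: List[str], memory: PreferenceMemory, max_rounds: int = 3) -> Dict[str, str]:
    plan: Dict[str, str] = {}
    for _ in range(max_rounds):
        plan = refine_plan(preliminary_plan(objects, memory), memory)  # steps 1-3
        violations = execute(plan)                                     # step 4
        corrections = human_adjustments(plan)                          # step 5 input
        if not violations and not corrections:
            break
        # Step 5: fold human feedback into memory so the next round respects it.
        # A fuller sketch would also pass `violations` back to the planner.
        for loc in corrections:
            if loc not in memory.avoided_locations:
                memory.avoided_locations.append(loc)
    return plan


memory = PreferenceMemory()
final = iclhf_loop(["book", "laptop", "mug"], memory)
print(final)               # {'book': 'desk', 'laptop': 'shelf', 'mug': 'shelf'}
print(memory.summarize())  # avoid bed
```

In this toy run, the first plan puts the book on the bed; the simulated user's adjustment is stored as "avoid bed", and the second round places everything on the desk and shelf while respecting their capacities.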

A Simulated Demonstration Case

Results

Simulation Experiments

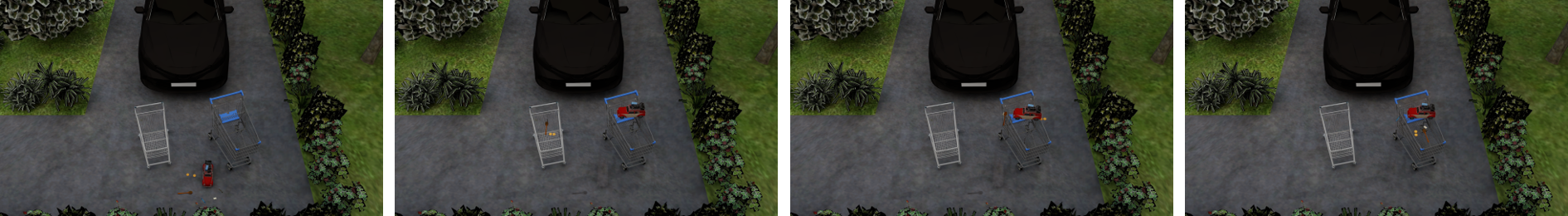

Unload car with human preference: "Keep things in one place. I do not want to make two trips."

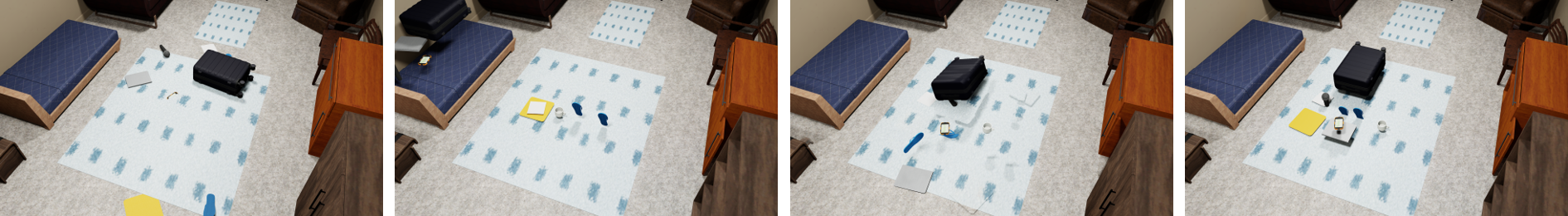

Unpack suitcase with human preference: "The bed is for sleeping, and I do not like things that have nothing to do with sleeping on the bed."

Clean room with human preference: "The bed is for sleeping, and I do not like things that have nothing to do with sleeping on the bed."

Tidy table with human preference: "I like that everything is laid out flat on the table, not stacked."

Clean room with all of the human preferences above.

Real Robot Experiments

Poster

BibTeX

@inproceedings{li2025iclhf,
  author={Li, Hongtao and Jiao, Ziyuan and Liu, Xiaofeng and Liu, Hangxin and Zheng, Zilong},
  booktitle={2025 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  title={In-situ Value-aligned Human-Robot Interactions with Physical Constraints},
  year={2025},
  pages={9231--9238},
  doi={10.1109/IROS60139.2025.11246572}
}